I’ve been experimenting with container-based virtualisation since about 2016, when I first learnt about Docker and how to use it. Since then I have used containers both at home and at work. I’ve built a fair number of containers for work, but my use of them at home started around 2017, when I figured out how to get them running on my NAS and started experimenting with services such as Nextcloud and Home Assistant. Over the next few years I built up a small collection of services that I ran 24/7 using Docker, and it worked surprisingly well.

It wasn’t until about 2020 that the old NAS was replaced with a new HP MicroServer NAS, which had a bit more processing power. At that point I looked at what I was running on the old NAS and decided I’d like to learn something different to manage the ever-growing collection of containers. I’d previously experimented with Docker Swarm, but at that point in time it seemed to be declining in popularity, so I decided to try out Kubernetes instead.

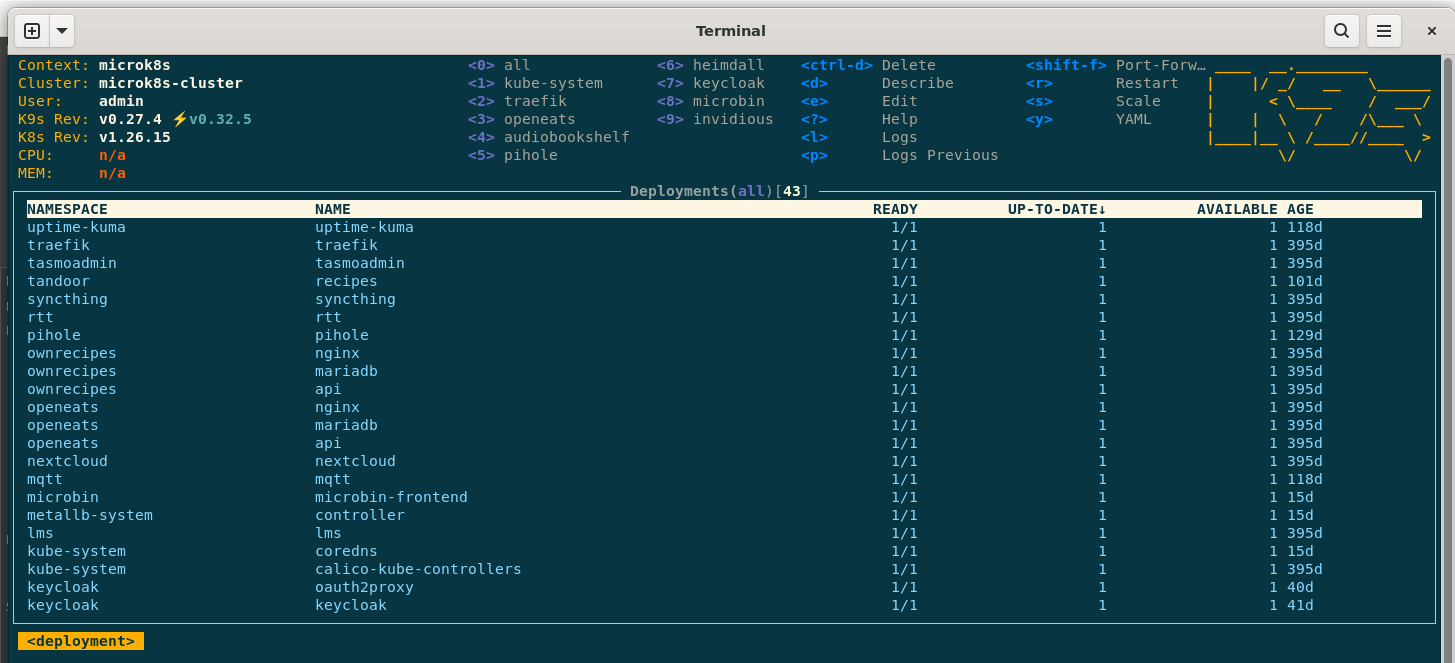

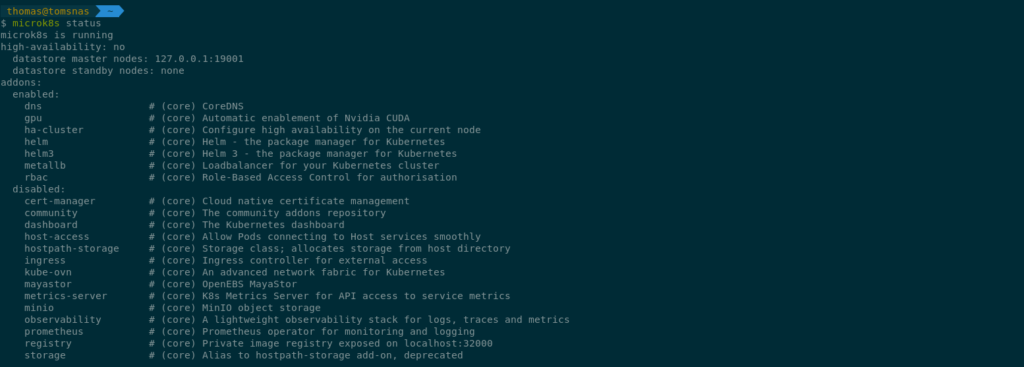

I began reading and watching videos on Kubernetes to learn the basics. Once I felt confident enough, I fired up MicroK8s and began to build a cluster. I started with MicroK8s because my research pointed to it being one of the easier ways of getting a production-ish cluster running without much hassle. I avoided distributions designed only for local testing, such as minikube, as I wanted to keep using what I had built on the NAS without having to switch to a different distribution halfway through.

It didn’t take long before I had a cluster running. With the help of the Kubernetes dashboard and CLI, I inspected what I had and ran the usual echo server just to give it a test. All was working as it should.

Previously, in Docker, I’d just exposed each service on a different port, but I wanted this new system to be done properly with a load balancer. I soon got Traefik working in the cluster and hooked it up to Let’s Encrypt via DNS validation so it could issue certificates for my domain, ensuring HTTPS could be used even if the containerised service didn’t support it itself.

At the time, I had a flat network with a single subnet, so I relied on the router firewall to control access to the NAS services via their different ports. As everything was now running behind Traefik and I didn’t want to use non-standard ports for HTTPS traffic, I could no longer firewall access per service. Therefore, my next step was to install MetalLB so that Traefik and some services could run on dedicated IPs, and the firewall could again be used to restrict access to the more critical services.

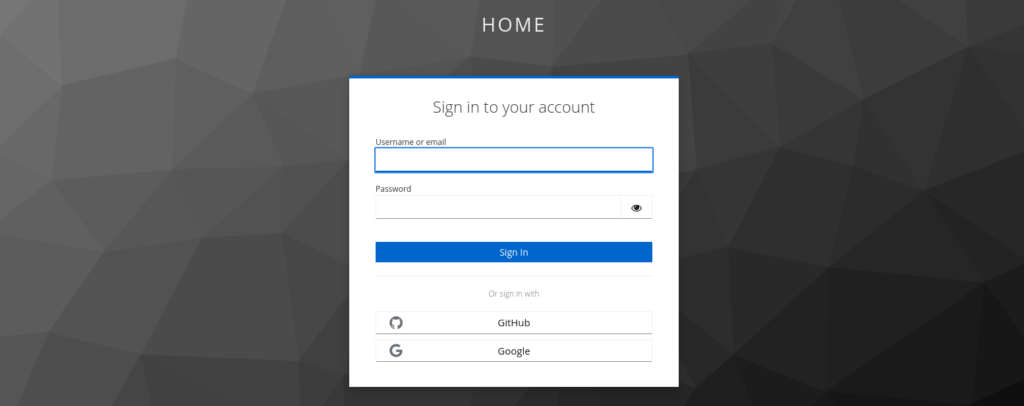

Eventually, I moved away from the flat network, and also from IP-based access control, to using Keycloak and OAuth2 Proxy to manage access via third-party SSO providers.

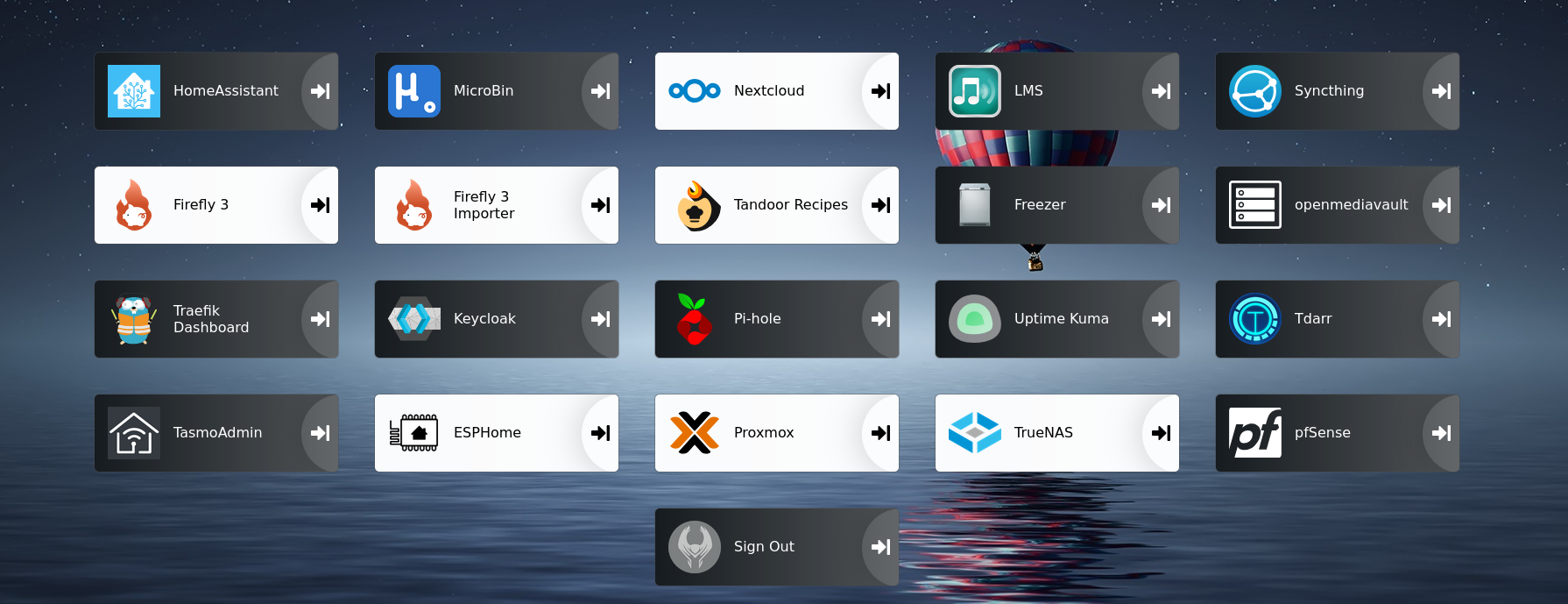

Everything else running in the cluster was just simple containers and deployment configurations. Most of the effort went into converting services that only provided a Docker or Docker Compose configuration rather than a way to deploy to Kubernetes, but that just required some YAML wrangling. For those curious, some of the services that I now run include:

- Pi-hole DNS – for blocking ad traffic for devices on my network (and enforcing Pi-hole for some of the devices that try to use other DNS providers).

- Tandoor – for recipe management.

- Home Assistant & MQTT – for home automation.

- Syncthing – for syncing across my various devices.

- Firefly III – for managing my finances.

- LMS – for handling multi-room music playback.

- Uptime Kuma – for monitoring my services for downtime.

- OctoPrint – for managing my 3D printer.
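
To give a flavour of the "YAML wrangling" involved in moving a Docker Compose service over to Kubernetes, here is a minimal Python sketch of the mapping. It is only an illustration under some assumptions: the function names are hypothetical, it handles just `image`, `ports` and `environment`, and it ignores volumes, networks and everything else a real conversion would need. It emits JSON rather than YAML to stay within the standard library; `kubectl` accepts either.

```python
import json


def compose_service_to_deployment(name, service):
    """Map one Docker Compose service (as a dict) to a Deployment manifest.

    Hypothetical helper: covers only image, ports and environment.
    """
    # Compose allows environment as a mapping or as a list of "KEY=value".
    env = service.get("environment", {})
    if isinstance(env, list):
        env = dict(item.partition("=")[::2] for item in env)

    # Compose ports look like "8080:80" or "80/tcp"; only the container
    # side matters for the pod spec.
    container_ports = []
    for mapping in service.get("ports", []):
        container_port = str(mapping).split(":")[-1].split("/")[0]
        container_ports.append({"containerPort": int(container_port)})

    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": service["image"],
                            "ports": container_ports,
                            "env": [
                                {"name": k, "value": str(v)}
                                for k, v in env.items()
                            ],
                        }
                    ]
                },
            },
        },
    }


def to_manifest_json(manifest):
    """Serialise a manifest so it can be piped to `kubectl apply -f -`."""
    return json.dumps(manifest, indent=2)
```

Passing in a service such as `{"image": "example/app:1.0", "ports": ["8080:80"]}` (a made-up image name) produces a single-replica Deployment; a matching Service and Ingress would still have to be written separately.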

As for keeping the containers up to date, I developed a very lightweight deployment script that checks for changes in GitHub and, if any are found, updates the cluster automatically. Coupled with Renovate raising PRs on the GitHub repo, I automatically get notified when a new version is available, and in some cases these updates are applied automatically.
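
The general shape of a "check Git, then update the cluster" script can be sketched in a few lines of Python. This is not the actual script, just a minimal illustration that assumes a local clone of the repo, a `manifests` directory inside it, a `main` branch, and `git` and `kubectl` on the path; all of those names are assumptions.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], str]


def run(cmd: Sequence[str]) -> str:
    """Run a command, fail loudly on errors, and return its trimmed stdout."""
    result = subprocess.run(list(cmd), check=True, capture_output=True, text=True)
    return result.stdout.strip()


def sync_once(
    repo_dir: str,
    manifests_dir: str = "manifests",  # assumed layout of the repo
    branch: str = "main",              # assumed branch name
    runner: Runner = run,
) -> bool:
    """Apply the manifests if the remote branch has moved; return True if it did."""
    git = ["git", "-C", repo_dir]

    # Refresh our view of the remote, then compare commit hashes.
    runner([*git, "fetch", "origin", branch])
    local = runner([*git, "rev-parse", "HEAD"])
    remote = runner([*git, "rev-parse", f"origin/{branch}"])
    if local == remote:
        return False  # nothing new, leave the cluster alone

    # Fast-forward the working copy and push the manifests to the cluster.
    runner([*git, "merge", "--ff-only", f"origin/{branch}"])
    runner(["kubectl", "apply", "--recursive", "-f", f"{repo_dir}/{manifests_dir}"])
    return True
```

Calling `sync_once` from a cron job, or from a loop with a sleep between iterations, gives the polling behaviour. The `runner` parameter exists only so the logic can be exercised without touching a real repo or cluster.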

Overall, for my use case, I probably didn’t need anything as heavyweight as Kubernetes. Something like Rancher would probably have been absolutely fine and wouldn’t have required me to learn a whole new technology stack, but where is the fun in that? It has certainly been an interesting learning experience; I don’t regret jumping in head-first and would absolutely do it again if I had the choice. As for the Kubernetes cluster, it’s still running and seems pretty stable, so I cannot complain.